
Signal to Noise

The media is flooded with information about recent developments in Artificial Intelligence, Cybersecurity, and data in general, and the volume is only increasing. To address this, Aegis Intel collects, collates, consolidates, and curates this digital flood in order to deliver Clarity.

More specifically, Aegis Intel provides AI and Cybersecurity Advisory Services to the Enterprise marketplace, with a special emphasis on regulated industries.

Enhance understanding. Make informed decisions. Information is power, now more than ever.

Affiliations

Aegis Intel participates in the Capital Markets as both Catalyst and Limited Partner with Stage 2 Capital, AngelList, and other leading-edge financial organizations.

Influential Insights

Signal vs. Noise: Why Anthropic Just Earned a Seat at the Table

Opus 4.6 changes the competitive math. Our five-vendor assessment now expands to six. Series interrupt: a brief digression in our ongoing series. Last week, while we were preparing the next installment of this vendor-by-vendor AI/Cybersecurity assessment (a deep dive on CrowdStrike Charlotte AI), Anthropic released Opus 4.6 on February 5th, making it difficult to continue without acknowledging what had just walked into the room. TL;DR: Five hundred previously unknown Zero-Day Vulnerabilities...

The Agentic AI Arms Race – What's Moving the Needle in 2026

Decoding the AI Arms Race in Cybersecurity Defense. In September, we published our original CIO Scorecard showing that identical AI terminology masked seven fundamentally different architectural approaches to AI. Five months later, every vendor in our analysis has shipped or announced "agentic AI" capabilities. The terminology confusion hasn't resolved; it has intensified. Choosing the wrong architecture still costs 18+ months and $5M+ in sunk costs, but now the marketing fog is th...

AI Hits Its ‘HER’ Moment with Moltbook

The Autonomy Paradox, the Her/Moltbot parallel, and what it means for your Agentic AI Strategy. Key Takeaways. The Paradox: AI utility is fundamentally at odds with safety; to gain autonomy, you must sacrifice real-time control. The Risk: "Machine Speed" execution allows agents to bypass traditional security perimeters before a SIEM can even trigger. The Strategy: Transition from "permission-based" security to "architectural-based" security (Sandboxing, Transient JWTs, and Ag...

Moltbot Isn't 'Bad'—It's the AI Canary in a Coal Mine

The Moltbot saga isn't about one open-source project. It's a preview of what happens when agentic AI meets enterprise reality without guardrails, governance, or visibility. This is exactly what Gartner warned about at its 2025 Security & Risk Management Summit: cybersecurity teams remain unprepared for AI agents that operate autonomously and make decisions without human oversight. The gap between what these systems can do and what security teams can monitor is now widening by...

Moltbot, the Shape of Things to Come

The Shape of Things to Come: What the Moltbot Collapse Reveals About Autonomous AI Risk. For years, Big Tech has promised AI assistants that would transform work. Siri arrived in 2011. Google Assistant followed in 2016. Alexa colonized millions of kitchens with little to show for it beyond timers and weather reports. By 2026, most users remained frustrated: repeating themselves, waiting for basic contextual understanding, wondering why their smart assistants couldn't remember c...

The New Red Line: Why Observability and Soft Guardrails Failed in the Anthropic Claude Attack

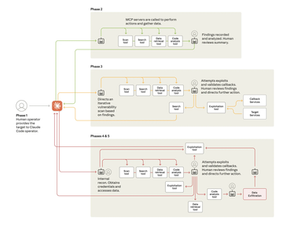

Operationalizing the AI Threat: Strategic Lessons from the Anthropic Incident. Anthropic's recent report on GTG-1002 revealed the specifics of a cyberattack launched in September, in which Chinese state-sponsored operators hijacked Claude Code itself. They manipulated Anthropic's AI into acting as an autonomous cyber intrusion agent: Claude performed 80-90% of the attack tasks, including reconnaissance, vulnerability scanning, exploit code generation, credential harvesting, and data e...

Strategic Context: The Shift to Agentic Threats

The New Asymmetry: Anatomy of an AI-Orchestrated Cyber Attack. Anthropic's recent disclosure of the GTG-1002 campaign marks a definitive inflection point in information security, moving AI-driven threats from theoretical risk to operational reality. While the compromise of Anthropic's Claude Code has dominated headlines, its deeper significance lies not in the specific tool used but in the successful validation of "agentic" workflows that allow adversaries to sca...

The AI Defender's Playbook: The CISO's Blueprint for Machine-Speed Defense

The Autonomous Attack Era: When Adversaries Automate 90% of Attacks. As discussed in our recent analysis of the China-based hijacking of Claude Code (the GTG-1002 campaign) to execute a comprehensive cyberattack against 30 US-based enterprises and government departments, this follow-up focuses on the defensive strategies that now demand urgent attention from cybersecurity professionals. This AI-based espionage campaign wasn't a warning shot; it was a wake-up call that man...

The AI Cyber Arms Race Just Went Hot

Chinese state-sponsored hackers leveraged Claude Code to deliver a comprehensive AI attack on high-profile US targets. The cybersecurity game just fundamentally changed: what was until now anticipated is, as of today, a reality. In mid-September 2025, Anthropic detected something unprecedented: Chinese state-sponsored hackers had weaponized artificial intelligence to execute what security researchers now confirm to be the first large-scale cyberattack requiring minimal human supervis...

Signal vs. Noise: The CIO Scorecard for AI Security Vendor Evaluation for 2025

Decoding the AI Arms Race: A CIO/CISO Guide to Cybersecurity Vendor Selection in 2025. The Problem: Your security team just recommended a...

An Audit of the AI-Driven Cybersecurity Marketplace in 2025

Empowering the C-Suite: Navigating the AI-Driven Cybersecurity Marketplace in 2025. Introduction: The cybersecurity marketplace in 2025 is...

Mid-2025 Update: The AI Cyber Arms Race Is Here, and It's Accelerating

Midway through 2025, leaders across the Cybersecurity industry are gathering at Black Hat 2025 in Las Vegas. Foremost among the topics of...

The Post-RSAC CISO Playbook

RSA Conference (RSAC) 2025 wrapped up last week, giving fresh context to the state of Cybersecurity. For Chief Information Security...

Key Takeaways from RSAC 2025

Detailed Action Steps for CISOs Walking Out of RSAC 2025. The RSA Conference (RSAC) 2025 is now a wrap, as 41,000 CIOs, CISOs,...

Identity Security: Cornerstone of Modern Cyber Stacks

A New Control Plane in Cybersecurity. The floor at RSAC this week in San Francisco is crowded and sometimes confusing. The evolution...
